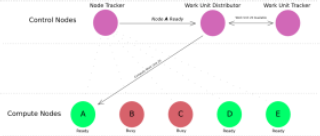

First, have a look at the diagram of the architecture of

Pari-Distributed below.

As you can see, Pari-Distributed depends on two kinds of nodes: the compute (or 'listener') nodes, which carry out the computations, and the control nodes, which send instructions, keep track of available nodes, and display output.

Before you can run Pari-Distributed across multiple computers, make sure the file ~/.erlang.cookie holds the same cookie on every host, since Erlang nodes only accept connections from nodes that share their cookie. See Official Documentation for an explanation.
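One way to check the cookie setup before going further is from the Erlang shell itself. The sketch below uses two standard calls, `erlang:get_cookie/0` and `net_adm:ping/1`; the node name `first@localhost` is only a placeholder for one of your own running nodes.

```erlang
%% Run inside the Erlang shell of a node that was started with a name.
erlang:get_cookie().             % this node's cookie, as an atom
net_adm:ping(first@localhost).   % pong if the node is reachable and the cookies match, pang otherwise
```

If the ping returns `pang`, compare the cookie files on the two hosts and check that the target node is actually running.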

First, you'll need to start the Node Tracker. The job of this process is to keep track of which nodes are available, and relay this information to the Work Unit Distributor, or 'dWU' node. To start nodetracker, compile the nodetracker module and run nodetrack:start(...) with two arguments: a list of 'listener' nodes, and the Distribute Work Unit node. For example,
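A sketch of such a call, using the node names discussed in the next paragraph. Note that the text refers to the module as both `nodetracker` and `nodetrack`; the call below follows the function name as written and should be adjusted to whichever name matches your source file.

```erlang
%% In the Erlang shell that will host the node tracker, after compiling the module.
%% First argument:  the list of listener nodes.
%% Second argument: the Distribute Work Unit (dWU) node.
%% The module name is taken from the text as written; adjust it if your file is named differently.
nodetrack:start([first@localhost, second@Gondor, third@myHost], dWUnode@localhost).
```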

The node tracker will now start attempting to contact the listener nodes you've specified, relaying information to the Distribute Work Unit node you've specified.

Before starting the listener node on each host, it is important to name it appropriately. In the example above, nodetracker expects the nodes to be named "first", "second", "third", and dWUnode, and to be running on hosts "localhost", "Gondor", "myHost", and "localhost", respectively. To start the node "first@localhost", you should start the Erlang shell with:

executed on host "localhost" (or, the same host that the nodetracker process is running on).
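As a sketch of the naming step, assuming short node names (`-sname`) are used; whichever naming mode you choose, every node in the cluster has to use the same one, because short-name and long-name nodes cannot talk to each other.

```erlang
%% From the operating-system shell on host "localhost" (not inside Erlang):
%%   erl -sname first@localhost
%%
%% Then, inside the Erlang shell that opens, confirm the node name:
node().   % should report first@localhost
```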

Once you've got the Erlang shell running under the appropriate name, it's time to start a listener node. Compile the main module for Pari-Distributed, localmessages:

and start the listener node:
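The two steps above, compiling and starting the listener, can be sketched as follows. This assumes the file localmessages.erl is in the directory the Erlang shell was started from.

```erlang
c(localmessages).               % compile and load the module; returns {ok,localmessages} on success
localmessages:start_listen().   % start the listener on this node
```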

Within a few seconds of being started, each listener node should start receiving messages from the nodetracker, querying the node's availability.

Finally, it's time to start the Work Unit Distributor, and assign computations to the listener nodes. Start up another Erlang shell, compile the localmessages module:

and run the start_talk function,

where StartPoint and EndPoint are integers representing the start and end of the range of values you'll be running a for loop over in the Pari/GP calculation, WorkUnitSize is an integer representing the number of index values each node will work on at a time, and IndexTrackerNode is the name of the process running the index tracker (as of now this is the same node you're running start_talk on, e.g. mytalknode@localhost).
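To make the role of WorkUnitSize concrete, here is a small illustrative module that splits a range into work units. It is not Pari-Distributed's own code: the module name `workunits`, the function `chunks/3`, and the choice to treat EndPoint as inclusive (with a possibly shorter final unit) are all assumptions made for the sketch.

```erlang
-module(workunits).
-export([chunks/3]).

%% Hypothetical helper, not part of Pari-Distributed.
%% Splits the inclusive range Start..End into {From, To} pairs,
%% each covering at most Size index values.
chunks(Start, End, _Size) when Start > End ->
    [];
chunks(Start, End, Size) when is_integer(Size), Size > 0 ->
    To = min(Start + Size - 1, End),
    [{Start, To} | chunks(To + 1, End, Size)].
```

Under these assumptions, `workunits:chunks(1000, 8500, 100)` yields 76 work units, starting with `{1000,1099}` and ending with the single-value unit `{8500,8500}`.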

For example, to have your listener nodes compute some loop over the range of 1000 to 8500 in chunks of 100, you would use the command:

Here talknode@localhost is the node responsible for running the index tracker, which, for now, is always the same node on which you execute the start_talk(...) command.
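A sketch of that call, assuming `start_talk` lives in the `localmessages` module and takes its arguments in the order they are described above (StartPoint, EndPoint, WorkUnitSize, IndexTrackerNode):

```erlang
%% Run in the Erlang shell named talknode@localhost, after c(localmessages).
%% Argument order is an assumption based on the parameter description.
localmessages:start_talk(1000, 8500, 100, talknode@localhost).
```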

When you're done running one or several successive start_talk(...) iterations, you'll probably want to kill the nodetracker process, and the listener nodes. You can do this with a single command, namely:

Where Node_Tracker_Node is the address of the node you instantiated nodetracker on. For example, if it is running under the Erlang shell named "NodeTracking@myHostName", execute:

which will kill the nodetracker and all listener nodes.

Let's take a simple example where we're running Pari-Distributed on two hosts, named Gondor and Isengard. We'll run the control nodes on Gondor (we're assuming they don't use too many CPU cycles), and we'll run one listener node on each of Gondor and Isengard. First, we start nodetracker on Gondor:

We've just started an Erlang shell, named 'nodetracker', on which we will run the nodetracker process like so:
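A sketch of these two steps, assuming short node names. The listener names come from this walkthrough; `talknode@Gondor` is a hypothetical name for the node that will later run `start_talk`, so substitute the name you actually use.

```erlang
%% From the operating-system shell on Gondor (not inside Erlang):
%%   erl -sname nodetracker
%%
%% Then, inside that Erlang shell, after compiling the node tracker module.
%% talknode@Gondor is a placeholder for your Work Unit Distributor node.
nodetrack:start([firstListener@Gondor, secondListener@Isengard], talknode@Gondor).
```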

The nodetracker will immediately begin attempting to contact the two listener nodes (firstListener@Gondor and secondListener@Isengard) we've passed in as arguments. It will start printing output like so:

You might notice that it complains about timing out waiting for a response from the listener nodes. This is fine, because we haven't started them yet. We will do so now, from another terminal on host Gondor, like so:
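A sketch of starting the first listener, assuming short node names and that Gondor's short hostname is `Gondor`, so that the shell comes up as firstListener@Gondor:

```erlang
%% From a second terminal on Gondor (operating-system shell, not inside Erlang):
%%   erl -sname firstListener
%%
%% Then, inside that Erlang shell:
c(localmessages).               % compile and load the module
localmessages:start_listen().   % start listening for the nodetracker and for work units
```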

A second or two after running 'localmessages:start_listen().', you should start seeing output indicating that your listener node is receiving communications from the nodetracker. If you check the nodetracker process, it should now start printing lines saying "Confirmed that node: firstListener@Gondor is alive."

Next, start the second listener process on host Isengard in the exact same fashion:

Finally, we can start the process that distributes work units to the two listener nodes, like so:

You should now, finally, start receiving the results of your desired computations from your compute nodes. In this case, we are using the default gp_input.gp file which tells the nodes to look for prime numbers within a certain range. The output will look something like this: